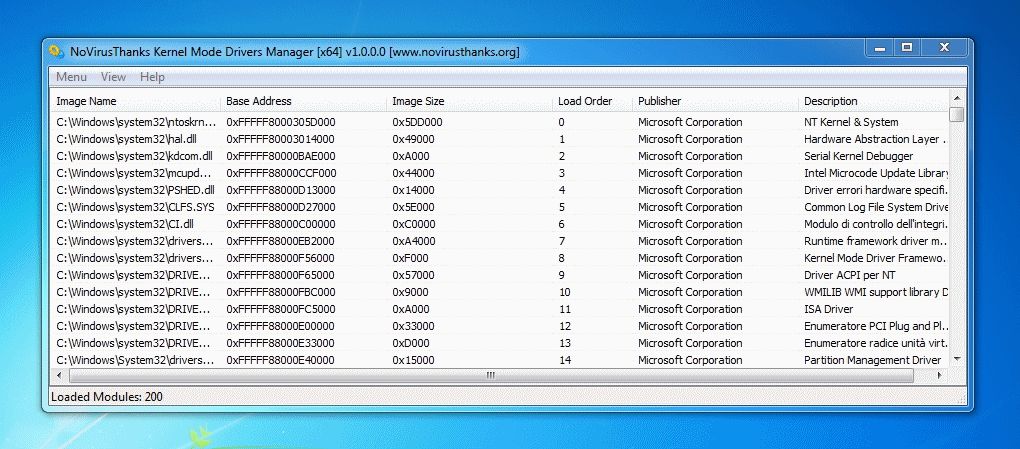

LXD supports GPU passthrough, but this is implemented in a very different way than what you would expect from a virtual machine. With containers, rather than passing a raw PCI device and having the container deal with it (which it can't), we instead have the host set up with all the needed drivers and only pass the resulting device nodes to the container.

This post focuses on NVidia and the CUDA toolkit specifically, but LXD's passthrough feature should work with all other GPUs too. NVidia is just what I happen to have around.

The test system used below is a virtual machine with two NVidia GT 730 cards attached to it. Those are very cheap, low-performance GPUs that have the advantage of existing as low-profile PCI cards, which fit fine in one of my servers and don't require extra power. For production CUDA workloads, you'll want something much better than this.

Install the CUDA tools and drivers on the host:

```
wget …
```

Then reboot the system to make sure everything is properly set up. After that, you should be able to confirm that your NVidia GPU is properly working with:

```
nvidia-smi
```

And you can check that the CUDA tools work properly with:

```
/usr/local/cuda-8.0/extras/demo_suite/bandwidthTest
```

The bandwidth test output ends with this note:

```
NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.
```

First, let's just create a regular Ubuntu 16.04 container:

```
lxc launch ubuntu:16.04 cuda
```

Then install the CUDA demo tools in there:

```
lxc exec cuda -- wget …
lxc exec cuda -- apt install cuda-demo-suite-8-0 --no-install-recommends
```

At which point, you can run:

```
lxc exec cuda -- nvidia-smi
```

which fails with:

```
NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.
```

This is expected, as LXD hasn't been told to pass any GPU yet.

LXD allows for pretty specific GPU passthrough; the details can be found here. First, let's start with the most generic option and just allow access to all GPUs:

```
lxc config device add cuda gpu gpu
Device gpu added to cuda
```

Then run `nvidia-smi` in the container again:

```
lxc exec cuda -- nvidia-smi
```
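Passing every GPU is the most generic option. As a sketch of the more specific route: LXD's `gpu` device type accepts selection properties such as `id` (card ID), `pci` (PCI address), `vendorid` and `productid`; check the LXD documentation for the exact set your version supports. The helpers below (`run` and `add_gpu` are hypothetical names, not part of LXD) build the matching `lxc config device add` command and, with `DRY_RUN=1`, only print it so you can review it before anything changes. Device names and the PCI address in the examples are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: attach only selected GPUs to a container instead of all of them.
# Assumption: "run" and "add_gpu" are hypothetical helpers, not LXD commands.

# Execute a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# add_gpu CONTAINER DEVICE [KEY=VALUE...]
# Adds a "gpu" device; any extra arguments (e.g. id=0, pci=...) are passed
# through to LXD as device properties that narrow which GPU is exposed.
add_gpu() {
  local container="$1" device="$2"
  shift 2
  run lxc config device add "$container" "$device" gpu "$@"
}
```

For instance, after removing the catch-all device with `lxc config device remove cuda gpu`, running `add_gpu cuda gpu0 id=0` would attach only the card LXD identifies as `0`. Prefix the call with `DRY_RUN=1` to print the command instead of running it.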

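One quick way to confirm the passthrough worked is to compare the GPU list on the host with the one inside the container. `nvidia-smi -L` prints one `GPU <index>: ...` line per card, so counting those lines on both sides is enough for a sanity check. This is a minimal sketch assuming the container is named `cuda` as above; `count_gpus` and `check_gpu_passthrough` are hypothetical helpers, not part of LXD or the NVIDIA tools.

```shell
#!/usr/bin/env bash
# Sketch: check that a container sees as many NVIDIA GPUs as the host.
# Assumption: count_gpus and check_gpu_passthrough are hypothetical helpers.

# Count the "GPU <index>: ..." lines printed by `nvidia-smi -L` on stdin.
# grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps the
# function from failing so callers still get "0".
count_gpus() {
  grep -c '^GPU [0-9][0-9]*:' || true
}

# Compare host and container GPU counts; returns non-zero on mismatch.
check_gpu_passthrough() {
  local container="$1" host_count container_count
  host_count=$(nvidia-smi -L | count_gpus)
  container_count=$(lxc exec "$container" -- nvidia-smi -L 2>&1 | count_gpus)
  echo "host: ${host_count} GPU(s), ${container}: ${container_count} GPU(s)"
  [ "$host_count" = "$container_count" ]
}
```

Running `check_gpu_passthrough cuda` before the `gpu` device is added should report a mismatch, since `nvidia-smi` fails inside the container; with the catch-all device in place, the counts should match.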